By Lloyd Lewis

Introduction

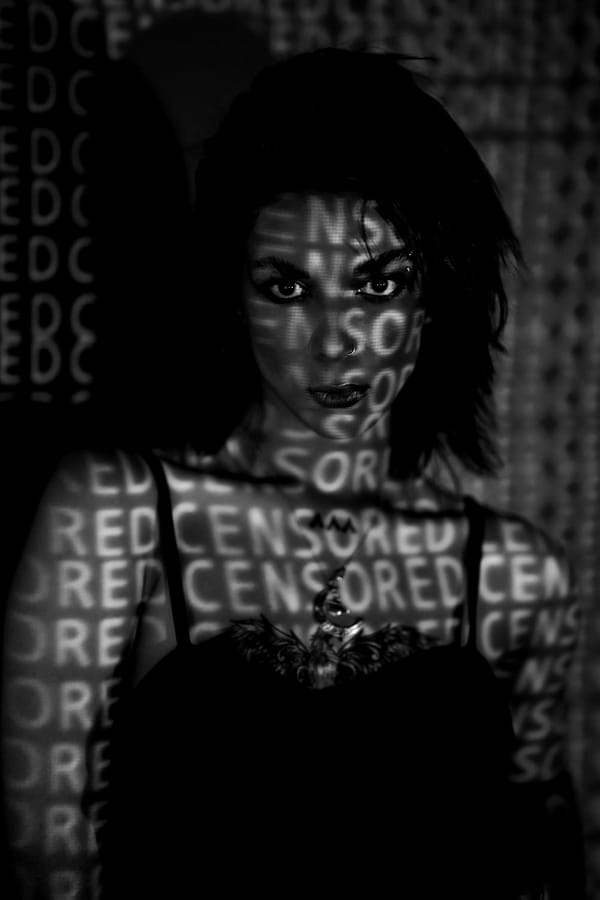

This is the first in a three-part series of essays under the title ‘Identity in the Age of AI’. These are not academic papers in the formal sense but academic-themed inquiries: attempts to gather references, ask questions, and reflect on what it means to exist in a time when every face has already become a dataset.

This essay explores the paradox of the digital footprint, the rise of deepfake pornography, and the legislative response of the UK’s Online Safety Act (OSA). Later essays will examine the political economy of synthetic media and the question of how individuals might survive in the age of the synthetic self.

By 2025, almost every individual with internet access carries a digital footprint so vast and dispersed that it can be reconstructed by machine learning systems without their knowledge or consent. The rise of generative AI has accelerated the capacity to turn this footprint into hyper-realistic images, voices, and even videos. What once required Hollywood budgets now requires little more than a consumer-grade graphics card, an open-source model, and a scraped dataset.

This capacity has collided with a different, slower form of governance: legislation such as the UK’s Online Safety Act (OSA), which since July 2025 has required sites that host pornographic material to check the age of their users. The rationale is protection: to shield minors from harmful content. Yet one of the greatest online harms of the present moment, deepfake pornography, is not solved by demanding more data from users. Instead, the OSA risks normalising a system in which users surrender biometric evidence to platforms that have already failed to protect them.


The Expanding Digital Footprint

A digital footprint is not a static set of data points but a living archive: selfies on Instagram, tagged photos on Facebook, LinkedIn portraits, gaming avatars, driver’s licence scans, school photographs, CCTV stills, even biometric data from airports.

In 2010, the European Commission estimated the average user’s “publicly available” footprint at around 1 GB. Today, according to MIT Technology Review (2024), generative AI companies routinely scrape exabytes of visual and textual data. Every face posted to a social platform has likely been duplicated thousands of times in datasets such as LAION-5B (2022) or in the proprietary corpora used by firms like OpenAI, Stability AI, and Clearview AI.

This means any person with a public footprint is now a latent dataset. The capacity to generate convincing forgeries of them is a function of availability, not consent.

Deepfake Pornography as Algorithmic Exploitation

Research by Deeptrace (2019) indicated that 96% of deepfakes online were pornographic. By 2025, the scale has multiplied. Communities on Reddit, Telegram, and bespoke forums produce and circulate non-consensual explicit videos of teachers, colleagues, politicians, and even minors, using AI models built on open-source software.

This is not a fringe issue. The UK’s Revenge Porn Helpline reported a 1,000% increase in deepfake reports between 2021 and 2024. According to the Brookings Institution (2023), the accessibility of “one-click” deepfake tools means harassment has scaled beyond law enforcement’s capacity to track it.

Against this backdrop, the OSA’s demand for age verification seems tragically misaligned. The greatest risk is not a teenager stumbling on explicit content but the ability of anyone, anywhere, to have their likeness harvested and re-engineered into abuse.

The Paradox of Age Verification

The OSA requires platforms hosting adult content to introduce robust age checks: typically government-issued ID scans, biometric checks such as facial age estimation, or third-party checks against financial records such as credit history.

The paradox is clear: to “protect” users from harmful content, citizens are asked to produce even more sensitive data — the very raw material from which deepfakes are generated. The system intended to remove harm increases the surface area of harm.

This echoes a broader pattern in digital governance: legislation tends to treat symptoms (access) while neglecting structures (data exploitation). To demand an ID check on a pornography site is to create a new trove of biometric data that may itself be leaked, scraped, or sold.

Risk Management Frameworks

Health and safety management provides a simple triad for assessing hazards:

- Remove the risk (eliminate the hazard entirely).

- Mitigate the risk (reduce likelihood or impact).

- Accept the risk (with monitoring).

Applying this to digital footprints:

- Removal: In theory, one could delete all images, erase all accounts, and retreat offline. In practice, this is impossible. The average 25-year-old in the UK has been photographed in over 10,000 digital contexts (schools, transport systems, retail CCTV). Removal is a fantasy.

- Mitigation: Possible through better laws (criminalising the creation and distribution of deepfakes), stronger platform accountability (forcing hosts to identify and remove content), and new technical tools (digital watermarking of authentic images). The EU’s AI Act requires providers of generative AI systems to mark their output as artificially generated in a machine-readable way, but these transparency obligations only apply from August 2026, and their enforcement remains untested.

- Acceptance: The most likely outcome is partial acceptance — citizens acknowledging their images are vulnerable, while hoping for legal recourse. But this “normalisation” is dangerous: it risks embedding deepfake abuse as a permanent feature of online life.

Toward Solutions

Three directions may provide partial relief:

Legal Accountability

- Expand criminal law to cover not only distribution but creation of non-consensual deepfakes.

- Establish strict liability for platforms hosting synthetic abuse, akin to liability for distributing stolen property.

Technological Countermeasures

- Embed digital watermarks in all public image uploads (invisible signals carried in the image data itself, rather than in easily stripped metadata).

- Invest in AI detection models — though this risks an “arms race” as generators evolve faster than detectors.

Cultural Change

- Promote a cultural shift where non-consensual synthetic media is viewed not as “jokes” or “fantasies” but as violence.

- Rather than reducing our response to the passive question “Is this real?”, we could adopt a healthier standard: demanding that source material be referenced, just as we expect in academic work. When an artist, journalist, or influencer publishes media, there should be an accompanying record of origin: what tools were used, what images were drawn from, what datasets informed the work.

- Academic institutions already insist on rigorous citation, a way of showing provenance without undermining originality. Similar models are being tested in digital art and publishing, from the Content Authenticity Initiative (CAI) to blockchain-based provenance systems. Yet here too we enter an arms race: as soon as detection or watermarking methods are introduced, new generative systems evolve to evade them.

- The challenge is not just technological but cultural. Without a shared expectation of accountability — “records available upon request” as a norm rather than an exception — the very idea of authenticity risks dissolving.

Going Forward

The next decade will determine whether the digital footprint becomes a weapon or an archive. The question is not whether our faces are online — they already are — but how societies choose to govern their reproduction.

This essay has only sketched the problem: the tension between deepfake exploitation, legislative age-verification regimes, and the impossibility of erasing decades of social media imagery. The answers are not yet clear. What seems certain is that the frameworks we currently use — prevention through ID checks, reactive content moderation — are misaligned with the structural reality of synthetic identity.

In the next part of this series, I will turn from the personal archive to the political economy: asking who profits from synthetic media, who controls the datasets, and what it means when likeness itself becomes a commodity.

References

- Brookings Institution (2023). The Future of Deepfakes: Governance and Harm.

- Derrida, J. (1995). Archive Fever: A Freudian Impression. University of Chicago Press.

- European Commission (2010). Digital Footprints and Data Availability Report.

- MIT Technology Review (2024). How Generative AI Companies Built Their Datasets.

- Revenge Porn Helpline (2024). Annual Report on Deepfake Abuse.

- Deeptrace (2019). The State of Deepfakes.

- EU (2025). Artificial Intelligence Act.

- [OOL Index: AOF-MED-REF:2025.01]

About the Author

Lloyd Lewis is a writer and artist exploring the intersections of digital culture, memory, and identity. His current work examines how algorithms, archives, and creative resistance shape the way we understand ourselves in the age of artificial intelligence. He writes for Art of FACELESS (AOF) as part of its wider publishing programme, but does not claim to speak for AOF itself.

Lewis’s projects often connect to The Hollow Circuit, a recursive narrative ecosystem spanning a novel, a visual novel, zines, and archival fragments. His writing traces the same themes that animate the Circuit: recursion, survival, and what it means to persist when every trace can be reproduced, altered, or erased.

By Art of FACELESS on August 23, 2025.

Exported from Medium on August 30, 2025.