A discussion piece by Lloyd Lewis

Series: Control Systems #1

I use the word “algorithm” a lot. Probably too much. It turns up in my fiction, in fragments I post online, in the footnotes of my own thinking. It's become a tic, a tell. I use it because it matters, because it governs so much. And I use it because I don’t trust it. That’s probably why I keep saying it—as if naming it might somehow help me understand it.

But what is it, really? We throw the word around as if it were a sentient thing. “The algorithm did this.” “Blame the algorithm.” “The algorithm likes short-form video.” We talk about it the way previous generations spoke about fate, or God, or the market. Something invisible, powerful, inscrutable. Something that moves through us and around us, shaping desire, behaviour, opportunity.

It is, ostensibly, just math. A system of instructions. A recipe for data. But in its modern usage, it's become shorthand for control—especially in digital capitalism.

Algorithms as Everyday Hauntings

You don’t see the algorithm. You see what it wants you to see. Your curated feed. Your search results. Your trending topics. Your recommendations. These are not neutral surfaces—they are psychic architectures, built to shape your attention like a sculptor shapes wet clay.

And it works. Not because the algorithm is so brilliant, but because human brains are so wonderfully predictable. We like patterns. We like frictionless decisions. We gravitate toward the familiar, the sensational, the affirming. Algorithms don't control us by brute force. They whisper. They anticipate. They nudge.

The algorithm is your invisible best friend and worst manipulator. It knows your bedtime. It knows your triggers. It knows the exact tone of article that will keep you reading while convincing you you're smarter than everyone else.

What happens when a person’s entire reality is filtered through this constant predictive architecture? If the walls of the world are built by machine learning, where does free thought even live?

The Loop is the Point

Here’s where it gets uncomfortable: you are not meant to escape. The algorithm doesn’t want resolution. It wants recursion. It wants a loop. That loop is data. That loop is profit. And most devastating of all: that loop is comfort.

Have you ever watched yourself gravitate toward the same types of stories? The same aesthetics? The same online arguments, the same tropes, the same three-chord progressions in different clothing? So have I. That’s why I keep using the word algorithm. It’s not just a critique. It’s a mirror.

Our lives are feedback loops. Attention becomes the raw material for visibility. Visibility becomes currency. Currency feeds content. Content chases attention. The ouroboros curls tighter.

Even my own vocabulary falls victim to this: recursion. Ruin. Glyph. Loop. I say them like they're spells. Maybe they are. Maybe naming them is the only form of resistance I have left.

Control Disguised as Choice

Here’s the sleight of hand: it all feels like choice. Your playlist. Your newsfeed. Your recommended viewing. What you don’t realise is that each of those choices was already narrowed for you. You are selecting from within a cage you cannot see.

This is not a new idea. But it is a particularly modern trap. The algorithmic prison is made of preference, not punishment. That’s why it’s so hard to reject. It flatters you with its knowledge. It offers you precisely what you might have wanted. And because it often gets it right, you don’t notice the narrowing. Until one day you do.

Then what?

You try to break the loop. You seek out the weird, the inconvenient, the ugly, the difficult. You go looking for voices not ranked by engagement. You try to make art that doesn’t sell. You whisper into the void and wait to see if it echoes.

But the algorithm follows. It adapts. It learns your rebellion and sells it back to you in a box.

Breaking the Loop (or Naming It)

So what can we do?

I don’t know.

But I believe in naming the pattern. I believe in knowing the loop, even if I can’t yet escape it. I believe in awkwardness. In friction. In boredom. In signals that take time to decode.

I believe in transmission that is not rewarded.

I believe that art that cannot be indexed still matters.

I believe the algorithm is not our god. It is our parasite.

And I will keep saying the word until it stops working.

"Algorithm" appears 17 times in this piece. That's 18 now.

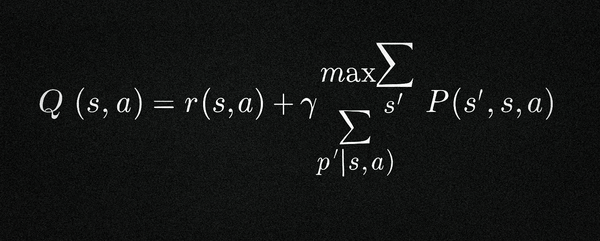

Footnote: The equation in the feature image is a foundational formula from Reinforcement Learning (RL) called the Bellman Equation (specifically, the Bellman Optimality Equation for Q-values in Q-learning).

Formal Name:

Bellman Optimality Equation

What it represents:

It describes the maximum expected future reward for an agent taking action $a$ in state $s$ and then following an optimal policy. It is used to compute the Q-value $Q(s,a)$, a cornerstone of many decision-making systems in AI, including algorithms that drive recommendation systems, game agents, and even robotic behaviour.

The equation:

$$Q(s,a) = r(s,a) + \gamma \sum_{s'} P(s' \mid s,a) \max_{a'} Q(s',a')$$

Where:

- $Q(s,a)$: Quality of action $a$ in state $s$

- $r(s,a)$: Immediate reward from taking action $a$ in state $s$

- $\gamma$: Discount factor (importance of future rewards)

- $P(s' \mid s,a)$: Probability of reaching state $s'$ from $s$ via $a$

- $\max_{a'} Q(s',a')$: Max expected future reward from the next state
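
For anyone who wants to watch the loop close on itself, here is a minimal sketch of the equation in code. It is not how any real recommendation system works. It assumes a made-up world with two states and two actions, and every name in it (`P`, `r`, `gamma`, `q_value_iteration`) and every number is hypothetical, chosen only for illustration. The function applies the right-hand side of the equation over and over until the Q-values stop changing.

```python
# Toy world, invented for illustration: 2 states, 2 actions.
# P[s][a][s2] = probability of reaching state s2 from state s via action a.
P = [
    [[1.0, 0.0], [0.0, 1.0]],  # state 0: action 0 stays put, action 1 moves to state 1
    [[1.0, 0.0], [0.0, 1.0]],  # state 1: action 0 moves to state 0, action 1 stays put
]
# r[s][a] = immediate reward. Only staying in state 1 pays anything.
r = [
    [0.0, 0.0],
    [0.0, 1.0],
]
gamma = 0.9  # discount factor


def q_value_iteration(P, r, gamma, tol=1e-10, max_iters=10_000):
    """Repeatedly apply the Bellman optimality update until Q stops changing."""
    n_states = len(r)
    n_actions = len(r[0])
    Q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(max_iters):
        # max over a' of Q(s', a'), computed once per next state s'
        best_next = [max(row) for row in Q]
        # Q(s,a) = r(s,a) + gamma * sum over s' of P(s'|s,a) * max_a' Q(s',a')
        Q_new = [
            [
                r[s][a]
                + gamma * sum(P[s][a][s2] * best_next[s2] for s2 in range(n_states))
                for a in range(n_actions)
            ]
            for s in range(n_states)
        ]
        delta = max(
            abs(Q_new[s][a] - Q[s][a])
            for s in range(n_states)
            for a in range(n_actions)
        )
        Q = Q_new
        if delta < tol:
            break
    return Q


Q = q_value_iteration(P, r, gamma)
for s, row in enumerate(Q):
    print(f"state {s}: " + ", ".join(f"Q({s},{a})={q:.3f}" for a, q in enumerate(row)))
```

In this toy world the value of staying in state 1 settles at about 10, which is just 1 / (1 - 0.9): a reward of 1, collected forever, discounted each step. The agent learns to go to the place that pays and never leave.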