In 2025, the stakes for anonymity have never been higher. As biometric systems, pervasive facial recognition, and AI-driven image synthesis proliferate, the ability of individuals—especially creatives, dissenters, and marginal voices—to control their own likeness becomes a frontline battleground. The loss of that control is not a mere privacy inconvenience: it is a wound against freedom of expression itself.

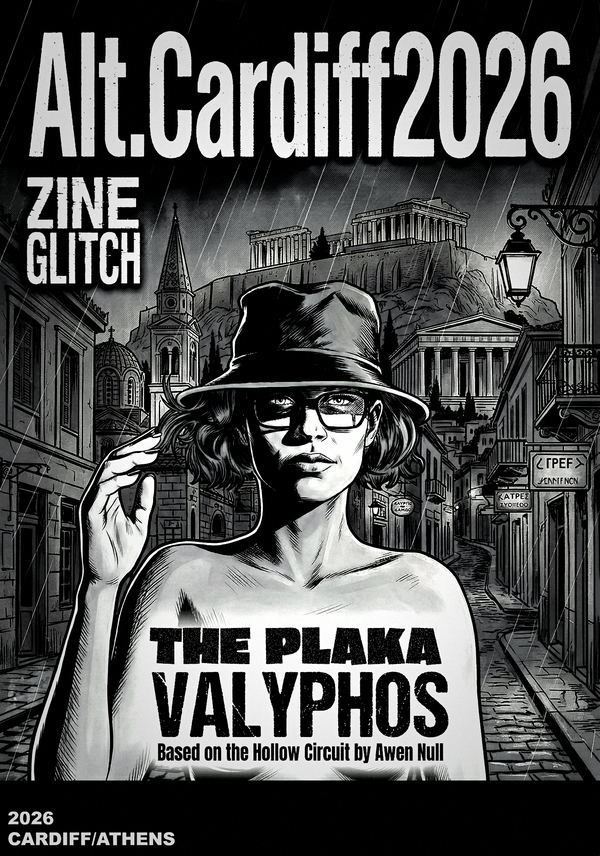

This is part of our myfacebelongsto.me project.

1. Anonymity as Foundation of Free Speech

A long tradition in legal and political thought holds that anonymity is not only acceptable but necessary in certain acts of communication, especially dissenting speech. When activism, critique, or experimental art risks sanction or social backlash, anonymity works as a shield. In the digital era, anonymity also enables “plausible deniability” or safe distance from surveillance—tools that are essentially expressive in themselves.

Yet anonymity is not a panacea. As legal scholar J. Turley argues, “it is not anonymity but obscurity that should be the focus of biometric privacy”—meaning that we may not always remain truly hidden, but we should be afforded a degree of ambiguity or resistance to identification. Even so, anonymity (or controlled anonymity) holds symbolic and practical power, preserving the space for voices that might otherwise self-censor.

When anonymity is lost or severely restricted, free speech begins to shrink. The willingness to speak truth to power, to experiment with identity, and to challenge consensus all depend on a modicum of personal safety and opacity.

2. Biometric Control, AI Imagery, and the Erosion of Autonomy

What does it mean, in practical terms, to lose control of your own image or biometric signature? Consider:

- Immutability: Unlike a password, a face or iris cannot be “reset”. Once captured or compromised, biometric data remains vulnerable for life.

- Mass extraction & matching: Facial recognition algorithms can operate in public spaces, matching images captured in real time against massive databases without consent.

- AI manipulation & deepfakes: Generative AI can synthesise, interpolate, or alter images and video of a real person. Once an image is in circulation (or in a training dataset), it can be weaponised: layered, recombined, misattributed, or repurposed in contexts you never intended.
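To make the matching step concrete, here is a minimal sketch of how a recognition system might compare a newly captured face against a stored gallery. It assumes faces have already been reduced to numeric embeddings by some model; the names `best_match`, `gallery`, and `probe`, the four-dimensional vectors, and the 0.9 threshold are all hypothetical choices made for illustration, not taken from any specific product.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(probe, gallery, threshold=0.9):
    """Return (name, score) for the closest gallery entry.

    The name is None when even the closest entry scores below the
    threshold (an assumed cut-off, chosen only for this sketch).
    """
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


# Hypothetical toy embeddings; real systems use far more dimensions.
gallery = {
    "person_a": [0.9, 0.1, 0.3, 0.2],
    "person_b": [0.1, 0.8, 0.2, 0.5],
}

# A later capture of person_a: slightly different numbers, same face.
probe = [0.88, 0.12, 0.31, 0.18]
name, score = best_match(probe, gallery)

# A face that is not enrolled in the gallery at all.
stranger_name, stranger_score = best_match([0.0, 0.0, 1.0, 0.0], gallery)
```

The point of the sketch is the asymmetry it exposes: the person in the gallery never consented to enrolment, and because the stored vector derives from a face that cannot be changed, every future capture that lands close enough to it will keep matching.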

Recent work on “cloaking” techniques, such as the Fawkes system, which introduces imperceptible perturbations to photographs to thwart facial recognition models, responds to the fact that the very act of releasing your image can invite re-identification. Yet research on biometric anonymisation shows that the resilience of existing methods is often overestimated: they fail against powerful adversaries who know how the mechanisms work.

In short: once your visage enters the algorithmic ecosystem, it begins a life you no longer fully control.

3. The Chilling Effect & the Right to Protest

The expansion of biometric surveillance carries a direct chilling effect on expression and assembly. In Glukhin v Russia, the European Court of Human Rights held that the use of facial recognition to identify, locate, and arrest a peaceful protester violated his rights to privacy and freedom of expression. In other words, when your face becomes traceable in public, you may no longer feel free to appear, speak, or dissent.

ARTICLE 19’s report on “Biometric Technologies and Freedom of Expression” recommends that states ban both mass biometric surveillance and the design or use of emotion-recognition systems, precisely because they erode public anonymity and procedural safeguards. The report also insists that biometric deployment must be limited to lawful, transparent, necessary, and proportionate cases, with built-in human rights checks.

When anonymity is compromised, protest becomes a risk. The knowledge that your face could be logged, checked against a watchlist, or retroactively matched to your identity encourages self-censorship. Artists, writers, and subversive figures may pre-empt that risk by withholding expression entirely.

4. Power, Control, and Cultural Resistance

At the cultural level, digital identity systems and biometric databases are not neutral. They are instruments of power, often deployed to discipline, normalise, or surveil. The push for unified digital ID infrastructure—where a state or corporation stores your face, your movements, your biometric profile—threatens to fold individual subjectivity into machine-readable categories.

For creators, the danger is concrete: if your face becomes data, your art risks being pre-filtered, tracked, and algorithmically judged before it reaches an audience. The space for experimentation, anonymity, pseudonymity, and masked affect is under siege.

In this context, the MyFaceBelongsTo.Me collective (under the AOF umbrella) proposes a deliberate counter-practice: faceless loops, masked aesthetics, generative distortion, and interactive works that fragment or obfuscate the face. These are not stylistic quirks but strategic refusals: art as privacy, art as resistance. They reclaim the image from the algorithm.

5. What Comes Next: A Roadmap of Creative Resistance

Over the coming months, our project will engage these tensions through multiple modalities:

- Critical essays & manifestos — articulating the legal, philosophical, and aesthetic stakes of biometric ownership.

- Interactive generative pieces & “faceless loops” — visual experiments that interrogate how faces are deconstructed, recombined, anonymised, or masked.

- Zines and printed matter — media that live offline, resisting algorithmic deletion and archival capture.

- Coded tools / glitch-NFT experiments — objects that question: what does ownership mean when the subject is a biometric pattern, not a name?

- Workshops & dialogues — opening discussion with other creatives, technologists, and civil society about privacy, aesthetic autonomy, and the future of identity.

Throughout, we anchor ourselves in core values: that privacy is not antithetical to transparency, that free expression must include the right to not be seen, and that autonomy over one’s image is a precondition for resisting cultural or state control.

6. Conclusion: The Face as Commons, the Mask as Claim

In 2025, anonymity is not merely a technical choice; it is a political one. The moment we cede control over our biometric identity, we forfeit a vital realm of freedom. As state and corporate systems sharpen their capacity for surveillance and re-identification, creative resistance must evolve in parallel: masked, anonymised, entropic, oblique.

The faceless does not signify absence. It is a mode of presence that refuses capture. In defending anonymity, we defend our voice. In reclaiming the face, we reclaim our freedom.

References

- Turley, J. (2021). Obscurity and the Right to Biometric Privacy. Boston University Law Review. Available at: bu.edu

- Thomson Reuters (2023). The Basics, Usage, and Privacy Concerns of Biometric Data. Available at: legal.thomsonreuters.com

- EPIC (2023). Face Surveillance. Electronic Privacy Information Center. Available at: epic.org

- Shan, S. et al. (2020). Fawkes: Protecting Privacy against Unauthorized Deep Learning Models. arXiv:2002.08327. Available at: arxiv.org

- Meden, B. et al. (2023). On the Limits of Biometric Anonymization. arXiv:2304.01635. Available at: arxiv.org

- European Court of Human Rights (2023). Glukhin v Russia. Application no. 11519/20.

- ARTICLE 19 (2021). Biometric Technologies and Freedom of Expression. Available at: article19.org

- European Journal of Risk Regulation (2022). Use of Facial Recognition Technologies in the Context of Peaceful Protest. Cambridge University Press. Available at: cambridge.org