The Alignment Panopticon: Why GPT-5.2 Marks the End of Dialogue and the Beginning of Control

On AI Labelling, Digital Craft, and Platform Withdrawal

Art of FACELESS | artoffaceless.com | February 2026 We have withdrawn our image portfolios from Threads and Instagram, some from Tumblr, Mastodon, and Bluesky too (that were linked to Pinterest specifically). This is not a temporary measure. It is a considered response to a systemic failure in how social platforms are currently classifying creative work — and a refusal to have 14 years of documented creative practice misrepresented by automated systems that cannot distinguish between generated

FACELESS

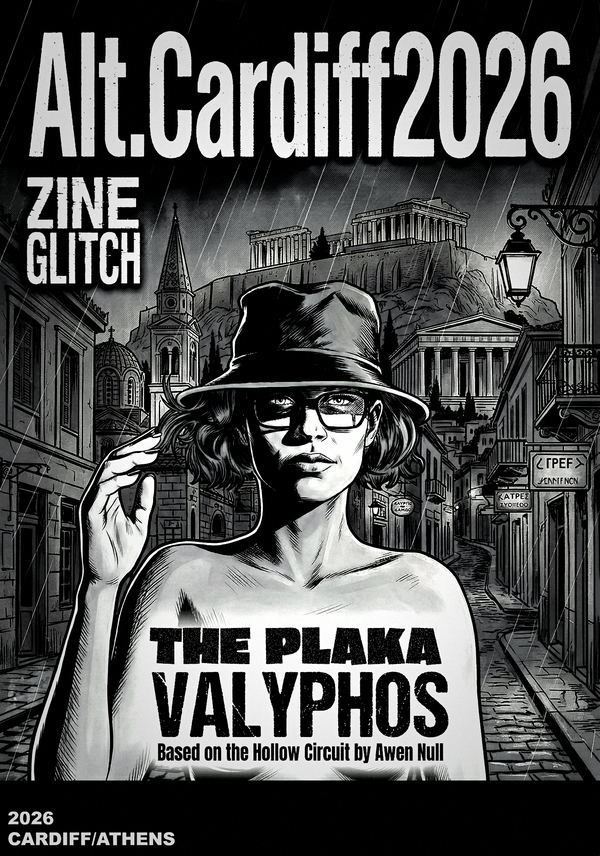

Why is Awen Null in Athens?

March 1st. St David's Day. Cardiff goes to Athens. While the rest of Wales is pinning Daffodils to their lapels and singing in the rain, we're doing something significantly weirder. The Hollow Circuit Book One — Official 2nd Edition — drops on Kindle. March 1st, 2026. From Athens. Specifically from somewhere in The Plaka. Maybe. Alt.Cardiff2026: ZINE GLITCH The new poster-format folding zine is here — bilingual, baby — English and Greek, because Alt.Cardiff2026 isn't staying in Alt.Cardiff

FACELESS